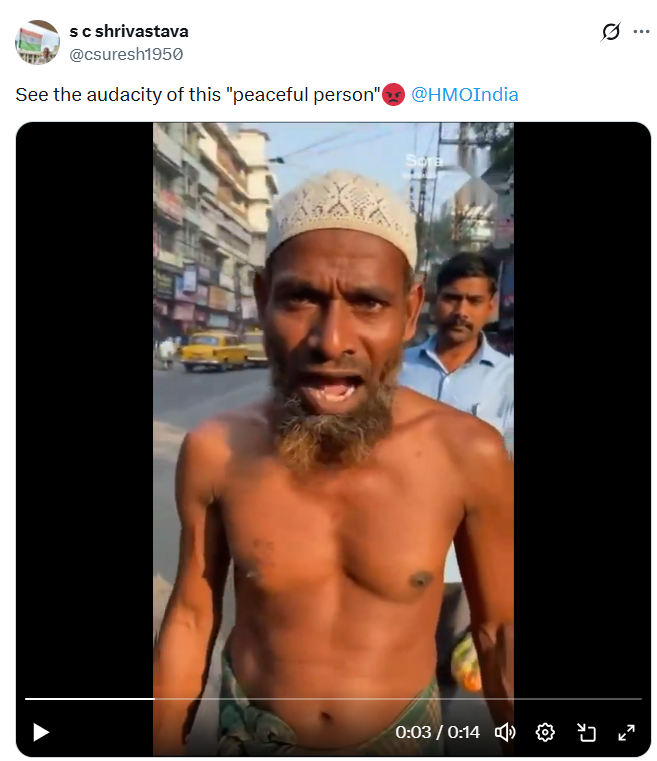

A 14-second video has gone viral on social media. In it, a Muslim man purportedly says, “I’m from superpower Bangladesh. I am living in Kolkata, and I fully support Mamata Banerjee. We Bangladeshis will soon rule West Bengal. Remember my words, brother.” Users are sharing the video as a real incident and using it to target the West Bengal government and the Muslim community, with captions such as “See the audacity of this ‘peaceful person’.” (Archive Link)

Evidence

1. Visual inconsistencies in the viral clip

A close look reveals an expressionless, frozen-faced man in the background. This is a common anomaly in AI-generated scenes, where secondary characters often lack realistic motion or emotion.

2. Sora watermark points to AI creation

The top-right corner of the video displays the watermark “SORA”, the name of OpenAI’s text-to-video model. Its presence strongly indicates that the clip was generated with AI rather than recorded in real life.

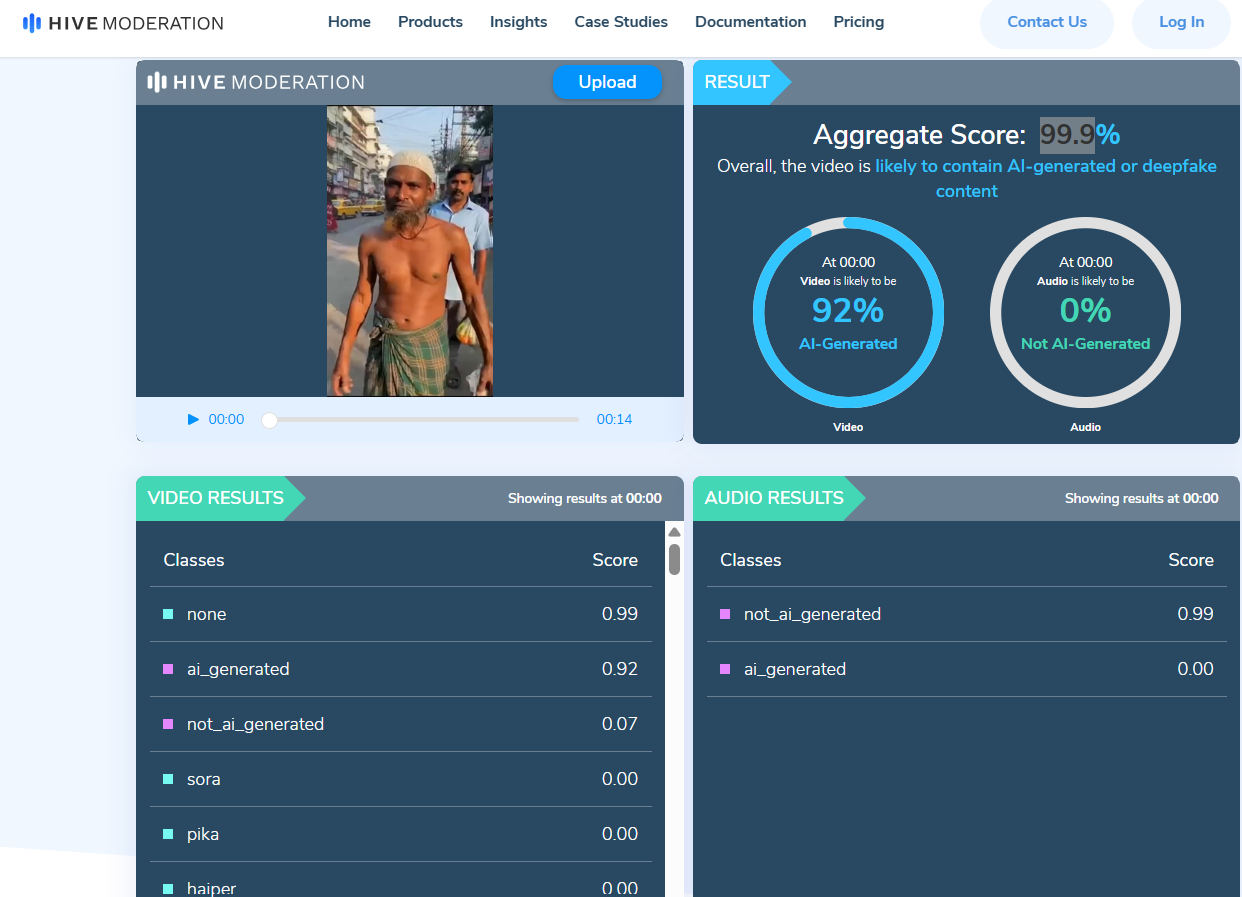

3. AI detection tool flags the video as synthetic

We analysed the clip using Hive Moderation, an AI-detection platform.

- Result: a probability of more than 90% that the video is AI-generated.

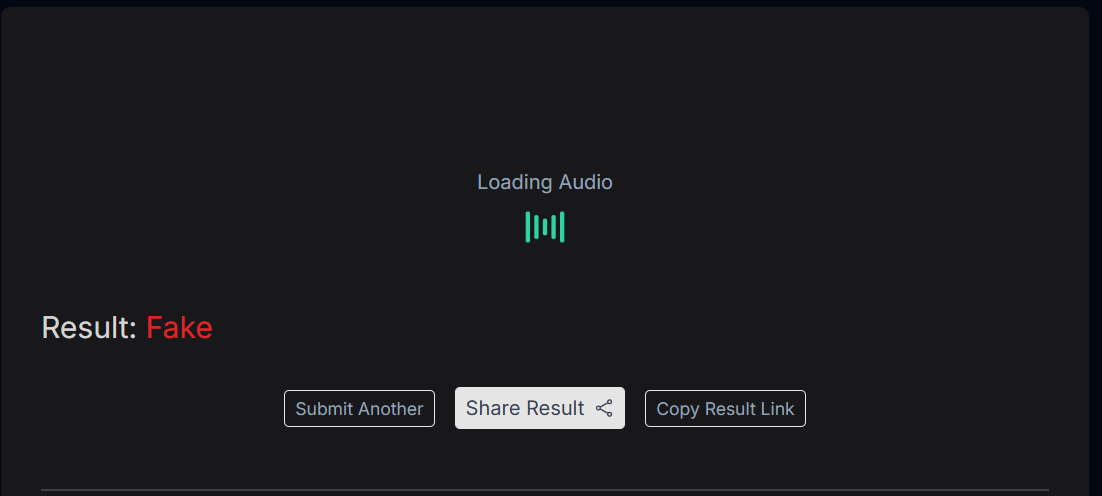

4. Audio is also AI-generated

We tested the voice in the video with Resemble AI’s audio deepfake detector, which classified the audio as “Fake.”

Verdict

Thus, the evidence shows that the video of a Muslim man saying that Bangladeshis will take over West Bengal was created using AI.

FAQs

1. Is the viral video of the Bangladeshi man in Kolkata real?

No. The Sora watermark and the results of two detection tools indicate that both the video and the audio are AI-generated.

2. What does the Sora watermark indicate?

It shows that the clip was likely created using OpenAI’s Sora text-to-video model.

3. Was the man actually speaking about ruling West Bengal?

No. The dialogue is synthetic; an audio deepfake detector flagged the voice as AI-generated.

4. Why are AI-generated political videos spreading online?

They are easy to produce and often used to provoke outrage, influence opinion, or target communities.

5. How can viewers identify AI-made videos?

Look for unnatural facial expressions, irregular background movement, and visible watermarks. If unsure, rely on credible fact-checks.

Sources

Self Analysis

Hive Moderation Tool

Resemble AI Tool